If you don’t have root access on a particular GNU/Linux system that you use, or if you don’t want to install anything to the system directories and potentially interfere with others’ work on the machine, one option is to build your favourite tools in your $HOME directory.

This can be useful if there’s some particular piece of software that you really need for whatever reason, particularly on legacy systems that you share with other users or developers. The process can include not just applications, but libraries as well; you can link against a mix of your own libraries and the system’s libraries as you need.

Preparation

In most cases this is actually quite a straightforward process, as long as you’re allowed to use the system’s compiler and any relevant build tools such as autoconf. If the ./configure script for your application allows a --prefix option, this is generally a good sign; you can normally test this with --help:

$ mkdir src

$ cd src

$ wget -q http://fooapp.example.com/fooapp-1.2.3.tar.gz

$ tar -xf fooapp-1.2.3.tar.gz

$ cd fooapp-1.2.3

$ pwd

/home/tom/src/fooapp-1.2.3

$ ./configure --help | grep -- --prefix

--prefix=PREFIX install architecture-independent files in PREFIX

Don’t do this if the security policy on your shared machine explicitly disallows compiling programs! However, it’s generally quite safe as you never need root privileges at any stage of the process.

Naturally, this is not a one-size-fits-all process; the build process will vary for different applications, but it’s a workable general approach to the task.
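
The --prefix check from earlier in this section can be wrapped in a small helper for use in scripts. This is only a sketch: the function name has_prefix_option is made up for illustration, and it assumes the configure script prints its options in response to --help, as Autoconf-generated scripts normally do.

```shell
# Hypothetical helper: succeed if the configure script given as $1
# (default: ./configure) mentions --prefix in its --help output.
has_prefix_option() {
    script=${1:-./configure}
    # No executable script at that path means there is nothing to check
    [ -x "$script" ] || return 1
    # The -- stops grep from treating the pattern itself as an option
    "$script" --help 2>/dev/null | grep -q -- '--prefix'
}

# Example use from inside an unpacked source tree:
#   has_prefix_option ./configure && echo "--prefix looks supported"
```

A successful check only shows that the option is listed; it does not guarantee that every file the build installs will respect the prefix.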

Installing

Configure the application or library with the usual call to ./configure, but use your home directory for the prefix:

$ ./configure --prefix=$HOME

If you want to include headers or link against libraries in your home directory, it may be appropriate to add definitions for CFLAGS and LDFLAGS to refer to those directories:

$ CFLAGS="-I$HOME/include" \

> LDFLAGS="-L$HOME/lib" \

> ./configure --prefix=$HOME

Some configure scripts instead allow you to specify the path to particular libraries. Again, you can generally check this with --help:

$ ./configure --prefix=$HOME --with-foolib=$HOME/lib

You should then be able to install the application with the usual make and make install, needing root privileges for neither:

$ make

$ make install

If successful, this process will insert files into directories like $HOME/bin and $HOME/lib. You can then try to call the application by its full path:

$ $HOME/bin/fooapp -v

fooapp v1.2.3
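
The configure, make, and make install steps in this section could be collected into one helper that stops at the first failure. This is a rough sketch rather than a general-purpose installer: home_install is a hypothetical name, and it assumes a conventional ./configure script in the current directory that accepts --prefix and honours CFLAGS and LDFLAGS.

```shell
# Hypothetical helper: configure, build, and install the source tree in the
# current directory under a prefix (default: $HOME), stopping on any failure.
home_install() {
    prefix=${1:-$HOME}
    # Point the compiler and linker at headers and libraries under the
    # prefix, keeping any CFLAGS or LDFLAGS that are already set.
    CFLAGS="-I$prefix/include${CFLAGS:+ $CFLAGS}" \
    LDFLAGS="-L$prefix/lib${LDFLAGS:+ $LDFLAGS}" \
        ./configure --prefix="$prefix" &&
        make &&
        make install
}

# Example use from inside an unpacked source tree:
#   cd ~/src/fooapp-1.2.3 && home_install
```

Chaining the steps with && means make install is never attempted after a failed configure or build, which avoids leaving a half-installed tree under the prefix.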

Environment setup

To make this work smoothly, it’s best to add to a couple of environment variables, probably in your .bashrc file, so that you can use the home-built application transparently.

First of all, if you linked the application against libraries also in your home directory, it will be necessary to add the library directory to LD_LIBRARY_PATH, so that the correct libraries are found and loaded at runtime:

$ /home/tom/bin/fooapp -v

/home/tom/bin/fooapp: error while loading shared libraries: libfoo.so: cannot open shared...

Could not load library foolib

$ export LD_LIBRARY_PATH="$HOME/lib${LD_LIBRARY_PATH:+:$LD_LIBRARY_PATH}"

$ /home/tom/bin/fooapp -v

fooapp v1.2.3

A more obvious step is adding the $HOME/bin directory to your $PATH so that you can call the application without typing its full path:

$ fooapp -v

-bash: fooapp: command not found

$ export PATH="$HOME/bin:$PATH"

$ fooapp -v

fooapp v1.2.3
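
If these exports live in .bashrc, the file may be sourced more than once in a session, and each pass would add another copy of the same directory. One possible way to guard against that is sketched below; prepend_dir is a hypothetical helper name, not something the shell provides.

```shell
# Hypothetical helper: print the colon-separated LIST ($2) with DIR ($1)
# placed first, unless DIR is already somewhere in LIST.
prepend_dir() {
    dir=$1 list=$2
    case ":$list:" in
        "::")       printf '%s\n' "$dir" ;;        # empty list: just the directory
        *":$dir:"*) printf '%s\n' "$list" ;;       # already present: unchanged
        *)          printf '%s\n' "$dir:$list" ;;  # otherwise: put it first
    esac
}

PATH=$(prepend_dir "$HOME/bin" "$PATH")
LD_LIBRARY_PATH=$(prepend_dir "$HOME/lib" "${LD_LIBRARY_PATH:-}")
export PATH LD_LIBRARY_PATH
```

Wrapping the list in colons before matching means a directory is found whether it sits at the start, middle, or end of the variable. It also avoids leaving a stray trailing colon in LD_LIBRARY_PATH when the variable starts out empty.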

Similarly, it is worthwhile to define MANPATH so that calls to man will read the manual for your build of the application first. You may find that $MANPATH is empty by default, so you will need to append the other manual locations to it. An easy way to do this is by appending the output of the manpath utility:

$ man -k fooapp

$ manpath

/usr/local/man:/usr/local/share/man:/usr/share/man

$ export MANPATH="$HOME/share/man:$(manpath)"

$ man -k fooapp

fooapp (1) - Fooapp, the programmer's foo apper
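
For a .bashrc, the same MANPATH setup might look something like the sketch below. It assumes the man-db implementation of manpath. The fallback branch relies on man-db's documented handling of a trailing colon in $MANPATH, which other man implementations may not share.

```shell
# Put the home manual directory ahead of the system's default search path.
if command -v manpath >/dev/null 2>&1; then
    # Ask for the default path with MANPATH unset inside the subshell, so
    # sourcing this file again does not keep growing the variable.
    MANPATH="$HOME/share/man:$(unset MANPATH; manpath 2>/dev/null)"
else
    # Assumption: with man-db, a trailing colon means "append the default
    # search path here".
    MANPATH="$HOME/share/man:"
fi
export MANPATH
```

Unsetting MANPATH only inside the command substitution leaves the variable in the interactive shell untouched until the final assignment.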

This done, you should be able to use your private build of the software comfortably, and all without ever needing to reach for root.

Caveats

This tends to work best for userspace tools like editors or other interactive command-line apps; it even works for shells. However, this is not a typical use case for most applications, which expect to be packaged or compiled into /usr/local, so there are no guarantees it will work exactly as expected.

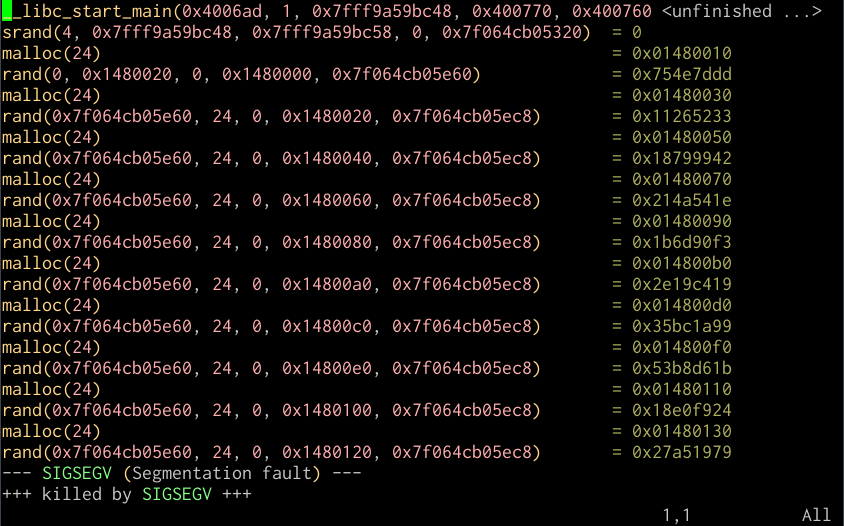

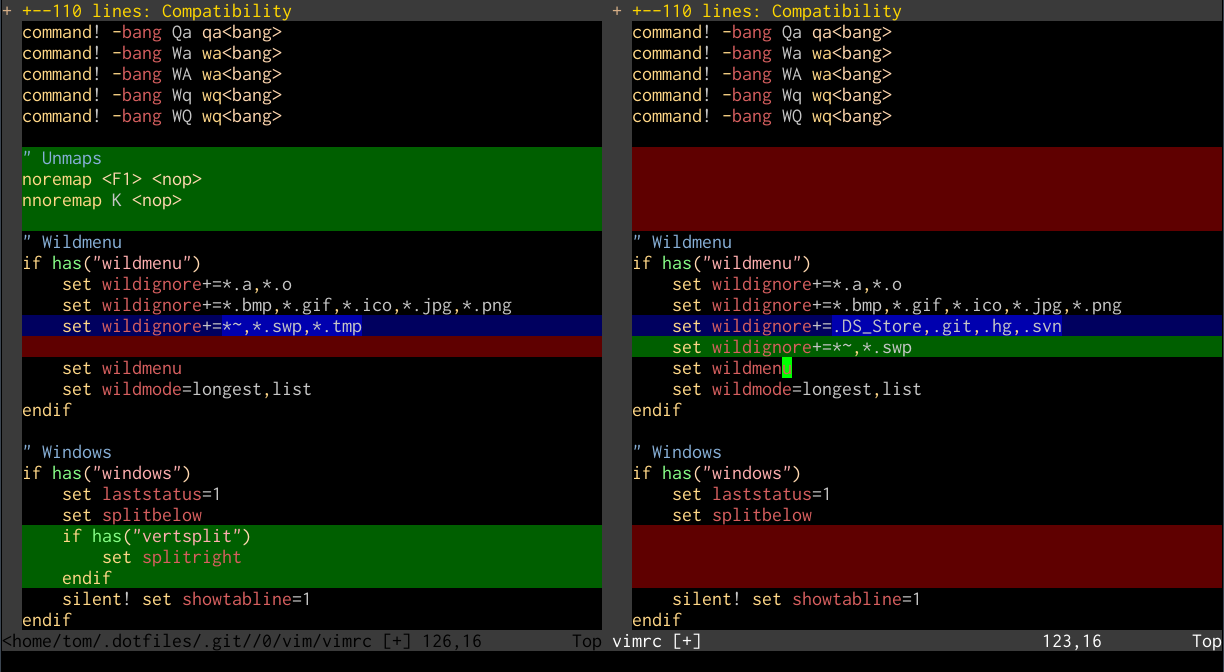

I have found that Vim and Tmux work very well like this, even with Tmux linked against a home-compiled instance of libevent, on which it depends.

In particular, if any part of the install process requires root privileges, such as making a setuid binary, then things are likely not to work as expected.